Earlier this year, Samsung unveiled HBM-PIM (High-Bandwidth Memory Processing-In-Memory), the world’s first high-bandwidth memory modules with integrated processing. The technology offers faster performance for AI applications while reducing power consumption. Now, the South Korean firm has announced that it will expand this DRAM technology to more applications.

During Hot Chips 33, an annual conference where new semiconductor technologies are unveiled, Samsung Semiconductor announced the first commercial application of HBM-PIM. The technology has been tested in the Xilinx Virtex UltraScale+ (Alveo) AI accelerator, where it delivered 2.5x faster processing and 60% better power efficiency. The result suggests that HBM-PIM is ready for a wider range of applications, including commercial servers and mobile devices.

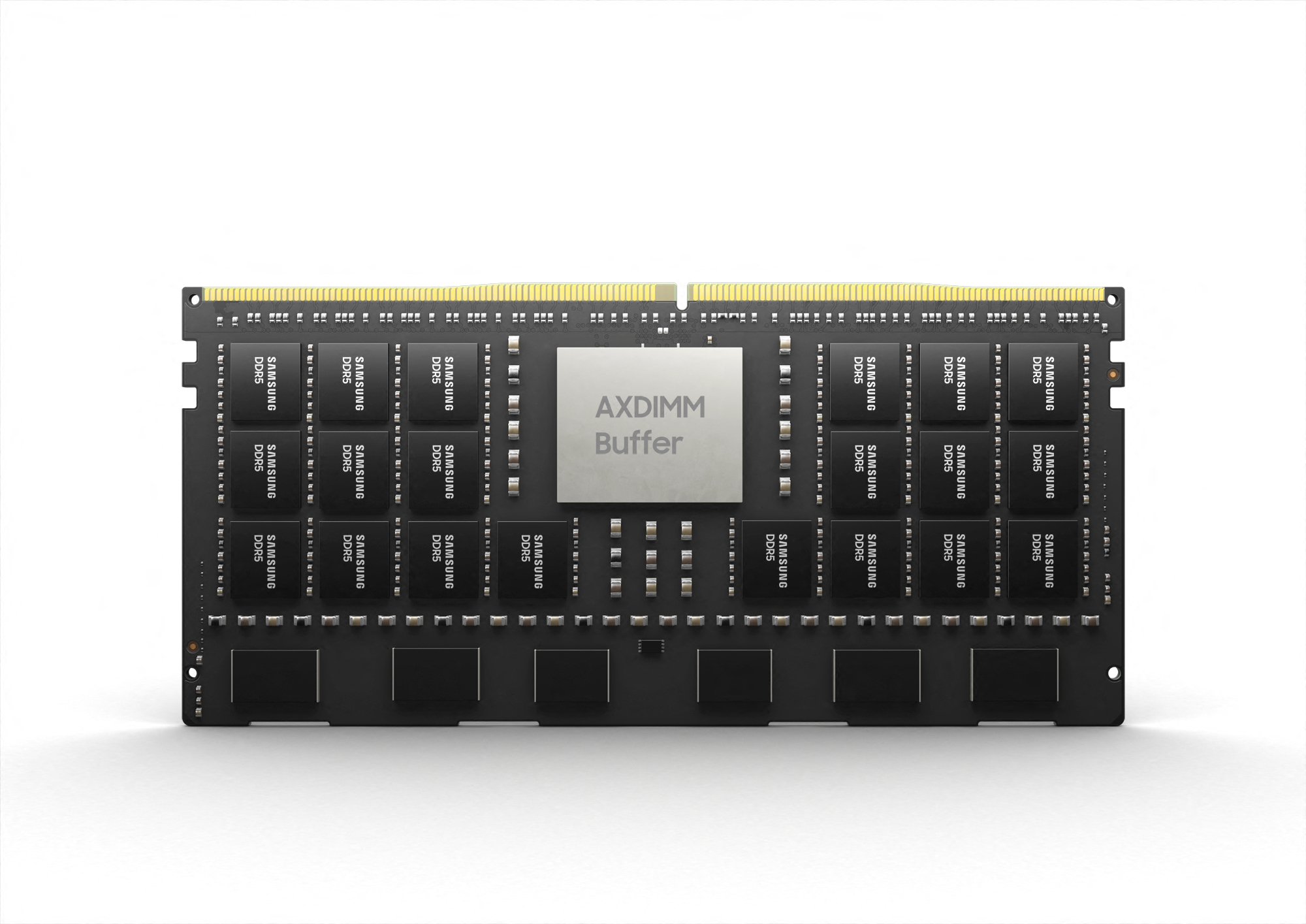

Samsung is also bringing processing to the DRAM module itself with AXDIMM (Acceleration DIMM), which combines memory chips and processing cores on a single module. This combination reduces large data movements between the CPU and DRAM. The AI engine built into the buffer chip can process multiple memory ranks in parallel, greatly improving speed and power efficiency.

These AXDIMM modules retain the conventional DIMM form factor, so they can easily replace traditional DIMMs. They are currently being tested on customer servers, where they reportedly deliver twice the performance in AI-based recommendation applications and 40% better power efficiency. SAP has been testing AXDIMM to accelerate its SAP HANA database.

Samsung also announced that it will soon bring processing-in-memory to mobile devices through LPDDR5-PIM modules. These modules offer twice the performance in certain AI tasks such as chatbots, translation, and voice recognition. Samsung, the world leader in the memory segment, plans to standardize the PIM platform in the first half of 2022 by working with other industry leaders.

Nam Sung Kim, Senior Vice President of DRAM Product & Technology at Samsung Electronics, said, “HBM-PIM is the industry’s first AI-tailored memory solution being tested in customer AI-accelerator systems, demonstrating tremendous commercial potential. Through standardization of the technology, applications will become numerous, expanding into HBM3 for next-generation supercomputers and AI applications, and even into mobile memory for on-device AI as well as for memory modules used in data centers.”

The post Samsung expands its HBM-PIM memory to more applications appeared first on SamMobile.

from SamMobile https://ift.tt/2UIobcN
